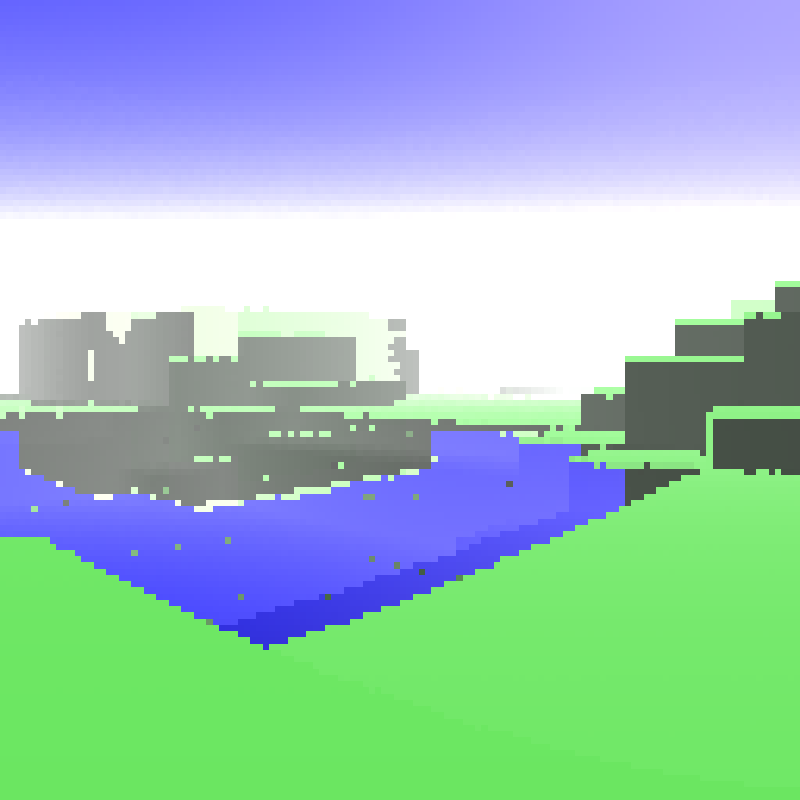

Raytraced water

This project started out life as an attempt to update an old top-down water simulation game I wrote. I wanted to reimagine it in 3D, which ultimately spun out into two projects:

- Simulating water-soil flow in 3D in real time

- Rendering the dynamic soil in a nice way

The simulation side turned out to be very hard, since real-time 3D water-soil flow is effectively state of the art in soil simulation, so I split the project and focused on rendering that can handle volumetric fluids.

Rays and raster

Most modern graphics are raster based: all the geometry is converted into triangles, which are transformed and then painted onto the screen in 2D. Raster graphics turned out to be extremely easy to parallelise and became the standard. Raytracing and raymarching are a more intuitive way of drawing: rays are sent out from the camera, followed as they bounce around the world, and used to calculate the light arriving at an imaginary "camera".
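As a minimal sketch of "sending out rays from the camera", the snippet below generates one primary ray per pixel for a pinhole camera. It assumes a camera at the origin looking down -z, +y up, pixel rows counted from the top, and a 60° vertical field of view; the function name `camera_ray` and these conventions are illustrative, not taken from the project's GLSL.

```python
import math

def camera_ray(px, py, width, height, fov_deg=60.0):
    """Unit direction of the primary ray through the centre of pixel (px, py).

    Assumes a pinhole camera at the origin looking down -z, with +y up and
    pixel rows counted from the top of the image.
    """
    aspect = width / height
    half_height = math.tan(math.radians(fov_deg) / 2.0)  # image-plane half-height at z = -1
    # Pixel centre -> normalised coordinates in [-1, 1], scaled onto the image plane.
    x = (2.0 * (px + 0.5) / width - 1.0) * aspect * half_height
    y = (1.0 - 2.0 * (py + 0.5) / height) * half_height
    z = -1.0
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)
```

In a compute-shader renderer this function would run once per invocation (one per pixel) rather than inside a Python loop.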

Raymarching

Raymarching is one way of evaluating the paths of light through a scene. In raymarching we simply step a ray along its direction in small, finite increments and, at each step, check whether it has intersected an object. Raymarching makes some interesting volumetric behaviours extremely easy, which I wanted to capture in the water model and which are harder to get from its more efficient cousin, raytracing. It allows easy refraction and density-based light decay, and it also lets you reduce interactions to a simple yes/no intersection test (with something like a signed distance function, or SDF).
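A minimal sketch of fixed-step raymarching, assuming a single sphere as the scene: the ray advances by a constant `step`, and the SDF is used only as a yes/no test (`sdf <= 0` means inside). The names `sphere_sdf` and `raymarch`, the step size and the maximum distance are illustrative choices, not the project's code.

```python
import math

def sphere_sdf(p, centre, radius):
    """Signed distance to a sphere: negative inside, zero on the surface, positive outside."""
    return math.dist(p, centre) - radius

def raymarch(origin, direction, inside, step=0.05, max_dist=50.0):
    """Advance along the ray in fixed steps of `step`.

    `inside(point)` is a yes/no intersection test. Returns the distance
    travelled at the first step that lands inside an object, or None if
    nothing is hit within `max_dist`.
    """
    i = 0
    t = 0.0
    while t < max_dist:
        p = tuple(o + d * t for o, d in zip(origin, direction))
        if inside(p):
            return t
        i += 1
        t = i * step  # multiply rather than accumulate to avoid floating-point drift
    return None
```

Smaller steps give more accurate hits at a proportionally higher cost, since the number of `inside` tests grows as `max_dist / step`.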

Implementation

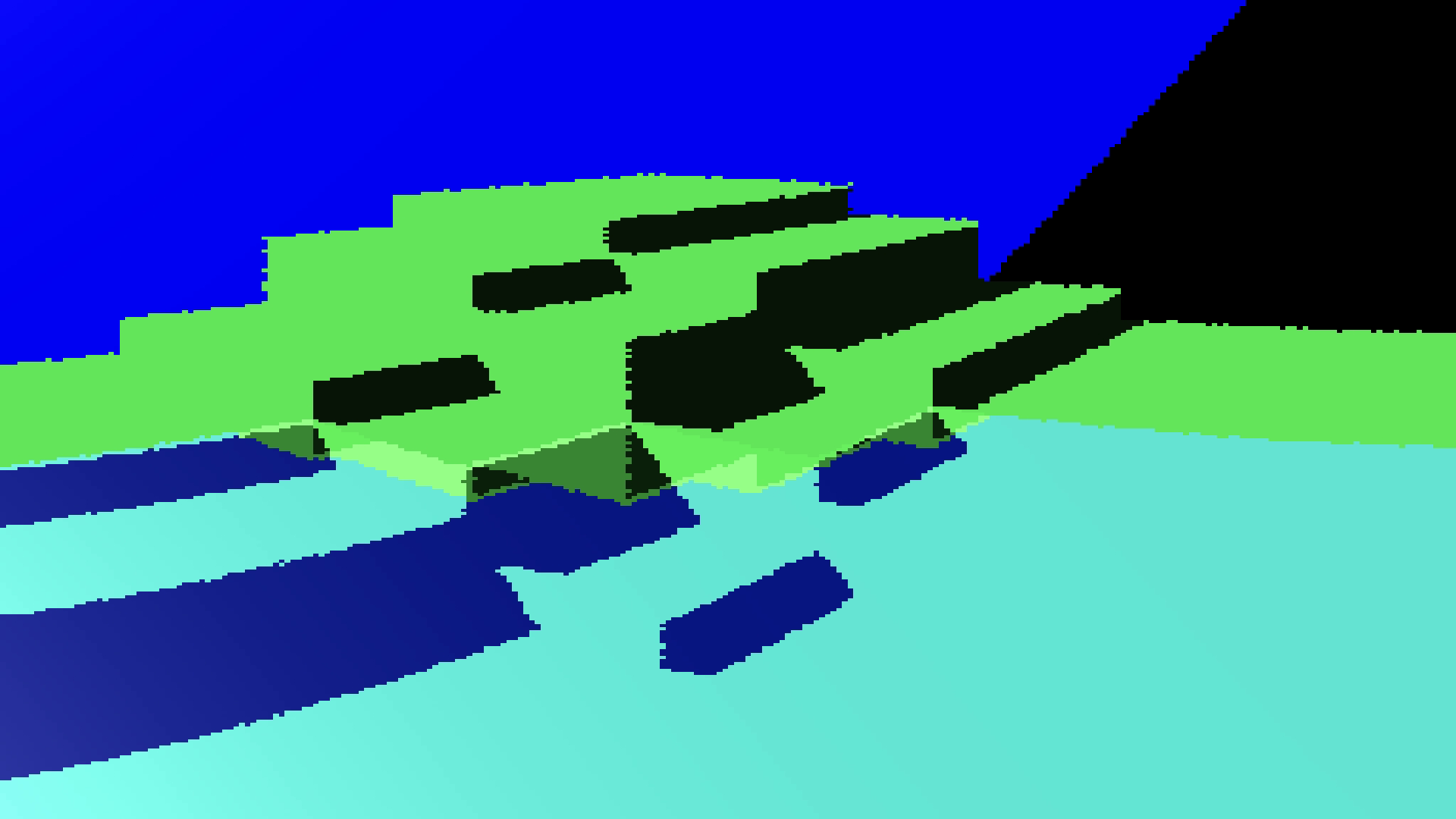

The world is a simple voxel world that is dynamically chunked and loaded onto the GPU piecemeal. A raymarching/raytracing hybrid is used for speed, i.e. a basic form of variable ray step size.
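The exact step rule isn't spelled out here. One common way to get variable steps in a voxel grid is to jump straight to the next voxel boundary along the ray, in the spirit of a DDA voxel traversal, instead of taking many small fixed steps. The sketch below assumes axis-aligned voxels of size `voxel_size` and a small `epsilon` nudge into the next voxel; `step_to_next_voxel` is a hypothetical helper, not necessarily what the shader does.

```python
import math

def step_to_next_voxel(pos, direction, voxel_size=1.0, epsilon=1e-4):
    """Ray parameter t that moves `pos` just past the nearest voxel-grid
    boundary along `direction` (hypothetical helper; direction must be non-zero)."""
    t_best = math.inf
    for p, d in zip(pos, direction):
        if d > 0.0:
            boundary = (math.floor(p / voxel_size) + 1.0) * voxel_size
        elif d < 0.0:
            boundary = math.floor(p / voxel_size) * voxel_size
        else:
            continue  # the ray never crosses a boundary on this axis
        t_best = min(t_best, (boundary - p) / d)
    # The epsilon nudge ensures the next sample lies inside the new voxel.
    return t_best + epsilon
```

Large empty regions can then be crossed in one step per voxel, and fine fixed-step marching is needed only where something interesting (water, fog, entities) might be.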

The core code is in a single 400-line GLSL file.

Using GLSL compute shaders makes synchronising with rendering easier than in a hybrid OpenGL-OpenCL or even OpenGL-CUDA compute scheme, because computation and rendering share the same OpenGL context.

A chunked voxel set is stored on the CPU, and a 5x5 square of chunks is streamed onto the GPU for rendering. To improve performance, the CPU could keep a copy of the GPU data and send only updated blocks, or swap chunks only when their visibility changes, but this was not implemented.
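The chunk swapping mentioned above was not implemented, but a sketch of it might look like this. It assumes chunks are indexed by 2D integer coordinates `(x, z)` and the 5x5 window is centred on the camera's chunk; when the camera moves, only chunks that entered the window need uploading and only chunks that left it need evicting. `visible_chunks` and `chunks_to_swap` are hypothetical names.

```python
def visible_chunks(camera_chunk, radius=2):
    """Chunk coordinates in the (2*radius+1) x (2*radius+1) window around the
    camera's chunk; radius=2 gives the 5x5 window."""
    cx, cz = camera_chunk
    return {(cx + dx, cz + dz)
            for dx in range(-radius, radius + 1)
            for dz in range(-radius, radius + 1)}

def chunks_to_swap(old_camera_chunk, new_camera_chunk, radius=2):
    """Return (to_upload, to_evict) when the camera moves between chunks."""
    old = visible_chunks(old_camera_chunk, radius)
    new = visible_chunks(new_camera_chunk, radius)
    return new - old, old - new
```

Moving one chunk along x changes a single row of five chunks instead of re-sending all 25.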

For each pixel, a ray direction is generated and slightly dithered using a random texture. We check whether the ray intersects a block, and if the block is solid we then raymarch for lighting. The lighting ray points at the sun: its contribution is zero if it hits an opaque block, it samples a sun texture if it leaves the loaded region, and it falls somewhere in between in other situations.
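A sketch of the lighting ray, assuming a fixed-step march through a voxel grid stored as a dictionary of block ids. Two simplifications are assumptions: a constant `sun_intensity` stands in for the sun texture, and the "somewhere in between" case is modelled as exponential attenuation through water blocks. `light_ray`, the block ids and all parameters are hypothetical.

```python
import math

AIR, WATER, SOLID = 0, 1, 2  # hypothetical block ids

def light_ray(grid, size, start, sun_dir, sun_intensity=1.0,
              step=0.25, water_absorption=0.5):
    """March from a surface point towards the sun through a voxel grid.

    grid: dict mapping integer (x, y, z) cells to block ids; missing cells are air.
    size: (sx, sy, sz) extent of the loaded region, in voxels.
    sun_dir: unit vector pointing at the sun (must be non-zero).
    Returns 0.0 if an opaque block is hit, otherwise the sun intensity scaled
    by how much light survives the water crossed before leaving the region.
    """
    transmittance = 1.0
    t = step  # begin one step out so the ray does not re-hit its own surface
    while True:
        p = [s + d * t for s, d in zip(start, sun_dir)]
        cell = tuple(math.floor(c) for c in p)
        if any(c < 0 or c >= n for c, n in zip(cell, size)):
            # Out of bounds: stands in for sampling the sun texture.
            return sun_intensity * transmittance
        block = grid.get(cell, AIR)
        if block == SOLID:
            return 0.0  # shadowed by an opaque block
        if block == WATER:
            transmittance *= math.exp(-water_absorption * step)
        t += step
```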

When a ray hits water, we trigger a reflection ray, which is functionally the same as a camera ray except that it cannot reflect again.
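A sketch of the one-bounce rule, assuming a hypothetical `scene(origin, direction)` query that returns `None` on a miss or a `(point, normal, material, brightness)` tuple on a hit. The reflection ray reuses the same `trace` function with `can_reflect=False`, so it cannot re-reflect. The fixed 50/50 blend and the scalar brightness are simplifications for illustration; a fuller renderer would typically weight the reflection with a Fresnel term.

```python
SKY = 1.0  # hypothetical brightness for rays that escape the scene

def reflect(d, n):
    """Mirror direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

def trace(origin, direction, scene, can_reflect=True, reflectivity=0.5):
    """Trace one ray and return a scalar brightness.

    scene(origin, direction) is a hypothetical hit query returning None on a
    miss, or (point, normal, material, brightness) on a hit.
    """
    hit = scene(origin, direction)
    if hit is None:
        return SKY
    point, normal, material, brightness = hit
    if material == "water" and can_reflect:
        # The reflection ray is an ordinary ray that is not allowed to reflect again.
        bounced = trace(point, reflect(direction, normal), scene, can_reflect=False)
        return (1.0 - reflectivity) * brightness + reflectivity * bounced
    return brightness
```

Because the recursive call passes `can_reflect=False`, even a scene where every ray hits water terminates after one bounce.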

If we are in a transparent block (water or air), we step forward some distance \(h\) and apply an optional volumetric effect that depends on the step size. In water, for example, this effect can remove some red light at each step, giving large bodies of water a deep blue colour. The air has a slight volumetric whitening to simulate fog catching the light.
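One way to make the effect depend on \(h\) consistently is Beer-Lambert absorption: each colour channel of the ray's running colour is multiplied by \(e^{-\sigma h}\), so many small steps give the same result as one large step of the same total length. The sketch below assumes made-up absorption coefficients (red absorbed fastest, so deep water turns blue) and models fog as an exponential blend towards white; `volumetric_step`, `WATER_SIGMA` and `FOG_DENSITY` are hypothetical.

```python
import math

# Hypothetical absorption coefficients per unit distance (R, G, B): red is
# absorbed fastest, so light that travels far through water ends up blue.
WATER_SIGMA = (0.6, 0.1, 0.05)
# Hypothetical fog density: how quickly air pulls the colour towards white.
FOG_DENSITY = 0.02

def volumetric_step(colour, medium, h):
    """Apply the volumetric effect of a single march step of length h."""
    if medium == "water":
        # Beer-Lambert: each channel keeps exp(-sigma * h) of its value.
        return tuple(c * math.exp(-s * h) for c, s in zip(colour, WATER_SIGMA))
    if medium == "air":
        # Fraction of fog picked up over this step; also exponential in h.
        f = 1.0 - math.exp(-FOG_DENSITY * h)
        return tuple(c + (1.0 - c) * f for c in colour)
    return colour
```

The exponential form is what makes the result independent of how the path is split into steps, which matters when step sizes vary.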

In theory we could cast a lighting ray at each of these volumetric steps for true volumetric lighting, but this would be very expensive.
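As a rough illustration of the cost (the step counts are assumptions, not measurements): if a camera ray takes \(N\) volumetric steps and each lighting ray takes \(M\) steps, one lighting ray per pixel costs about \(N + M\) steps, whereas a lighting ray at every volumetric step costs about \(N + NM\). With \(N = 200\) and \(M = 100\):

$$
\underbrace{N + M}_{\text{one lighting ray}} = 200 + 100 = 300,
\qquad
\underbrace{N + NM}_{\text{lighting ray per step}} = 200 + 200 \cdot 100 = 20\,200,
$$

roughly 67 times more work per pixel.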

Entities

Entities are rendered in a rudimentary way using SDFs. An entity is defined as a group of shapes that is not attached to the voxel grid but still occupies space in the world. The CPU sets a flag in the voxel data structure to tell the voxel raymarcher that a voxel may contain arbitrary objects. When the ray is in a voxel that could contain an entity, the marching resolution is increased to allow high-resolution entity rendering.
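A sketch of the entity path, assuming unit voxels, a set of flagged voxel coordinates standing in for the CPU-set flag, and entities built from spheres combined with `min` (the standard SDF union). Outside flagged voxels the ray jumps to the next voxel boundary; inside them it switches to fine fixed steps and tests the entity SDF. All names and step sizes are hypothetical.

```python
import math

def sphere(centre, radius):
    """Return the SDF of a sphere as a function of a point."""
    return lambda p: math.dist(p, centre) - radius

def entity_sdf(p, shapes):
    """An entity is a group of shapes; the union of their SDFs is the minimum."""
    return min(shape(p) for shape in shapes)

def to_next_boundary(p, d, eps=1e-4):
    """Ray distance from p to just past the next unit-voxel boundary along d."""
    ts = []
    for pi, di in zip(p, d):
        if di > 0.0:
            ts.append((math.floor(pi) + 1.0 - pi) / di)
        elif di < 0.0:
            ts.append((math.floor(pi) - pi) / di)
    return min(ts) + eps

def march_entities(origin, direction, flagged, shapes, fine=0.02, max_dist=20.0):
    """Return the distance to the first entity hit, or None.

    flagged: set of integer voxel coordinates marked (by the CPU) as possibly
    containing an entity. Only those voxels get fine steps and SDF tests.
    """
    t = 0.0
    while t < max_dist:
        p = tuple(o + d * t for o, d in zip(origin, direction))
        cell = tuple(math.floor(c) for c in p)
        if cell in flagged:
            if entity_sdf(p, shapes) <= 0.0:
                return t
            t += fine  # high-resolution marching only where an entity may be
        else:
            t += to_next_boundary(p, direction)  # skip the rest of an ordinary voxel
    return None
```

If the entity's voxel is not flagged, the marcher never evaluates its SDF, which is the saving the flag buys.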

Demo

Some YouTube videos of the development: